This article showcases a project on managing big data using MySQL Workbench, focusing on the Adventure Works dataset. The project involves constructing a relational database system and performing data analysis queries using SQL.

Data Source Link to heading

The Adventure Works dataset was obtained from Kaggle, an open-source platform that provides a large number of datasets and challenges for users to work on. This dataset consists of ten different tables stored in .csv format and includes information on 18000 customers. Both women and men are involved in purchasing the company’s products, and each customer’s data includes their personal information such as customer key, first name, last name, email address, and annual income.

The dataset also includes products and their subcategories, featuring different brands of bikes categorized into four groups: bikes, components, clothing, and accessories. The company manufactures its own components, accessories, and clothing, but the primary products of Adventure Works are bikes, including mountain, road, and touring bikes.

Additionally, the dataset contains more than 56000 sales records stored in separate tables, covering the years 2015, 2016, and 2017. Other tables hold information on returned orders and shipping territories.

Data Cleaning and Schema Setup Link to heading

After gathering the data, the first step was data cleaning to prepare the dataset for building the AdventureWorks Database Schema. Although the data was mostly cleaned, we still did a few processing steps in Excel to ensure everything was in order. These steps included:

Ensuring Correct Data Types: We double-checked that all columns had the correct data types, particularly for MySQL’s DATE format. For instance, columns containing dates were converted from the US format

MM-DD-YYYYto the standardYYYY-MM-DDformat, making it compatible with MySQL.Removing Special Characters: Special characters and symbols, like the

$sign in theAnnual Incomecolumn, were removed. This was essential to ensure that columns expected to hold integers could do so properly. This will also allow us to run statistical queries and sort the data efficiently.Ensuring Unique Primary Keys: We validated the uniqueness of primary keys. For example, we found some duplicate

OrderNumbersin the sales tables, which would prevent us from using the column as a primary key. In such cases, we made sure to rename or remove duplicates to maintain data integrity and avoid issues during the database creation process.

The cleaned datasets are available for download here.

Once data cleaning was completed, we moved on to creating the Adventure Works Database Schema and importing the table data. However, before importing, it was essential to define the databases and tables with the appropriate data types.

The SQL queries for defining the database and its entities are available here. We used data types such as DATE for date fields, VARCHAR for variable character fields, and INT and DECIMAL for numerical data.

/*CREATE THE DATABASE AdventureWorks*/

DROP DATABASE IF EXISTS adventureworks;

CREATE DATABASE AdventureWorks;

/*Use Database*/

USE AdventureWorks;

/* Table structure for the calendar */

DROP TABLE IF EXISTS `calendar`;

CREATE TABLE `calendar` (

`OrderDate` DATE NOT NULL,

PRIMARY KEY (`OrderDate`)

);

/* Table structure for the customers */

DROP TABLE IF EXISTS `customers`;

CREATE TABLE `customers` (

`CustomerKey` INT NOT NULL,

`Prefix` VARCHAR(100) NOT NULL,

`FirstName` VARCHAR(100) NOT NULL,

`LastName` VARCHAR(100) NOT NULL,

`BirthDate` DATE NOT NULL,

`MaritalStatus` VARCHAR(100) NOT NULL,

`Gender` VARCHAR(100) NOT NULL,

`EmailAddress` VARCHAR(100) NOT NULL,

`AnnualIncome` INT NOT NULL,

`TotalChildren` INT NOT NULL,

`EducationLevel` VARCHAR(100) NOT NULL,

`Occupation` VARCHAR(100) NOT NULL,

`HomeOwner` VARCHAR(100) NOT NULL,

PRIMARY KEY (`CustomerKey`)

);

/* Table structure for product categories */

DROP TABLE IF EXISTS `product_categories`;

CREATE TABLE `product_categories` (

`ProductCategoryKey` INT NOT NULL,

`CategoryName` VARCHAR(50) DEFAULT NULL,

PRIMARY KEY (`ProductCategoryKey`)

);

/* Table structure for product subcategories */

DROP TABLE IF EXISTS `product_subcategories`;

CREATE TABLE `product_subcategories` (

`ProductSubcategoryKey` INT NOT NULL,

`SubcategoryName` VARCHAR(100) NOT NULL,

`ProductCategoryKey` INT NOT NULL,

PRIMARY KEY (`ProductSubcategoryKey`),

KEY `ProductCategoryKey` (`ProductCategoryKey`),

CONSTRAINT `product_subcategories_ibfk_1` FOREIGN KEY (`ProductCategoryKey`) REFERENCES `product_categories` (`ProductCategoryKey`)

);

/* Table structure for products */

DROP TABLE IF EXISTS `products`;

CREATE TABLE `products` (

`ProductKey` INT NOT NULL,

`ProductSubcategoryKey` INT NOT NULL,

`ProductSKU` VARCHAR(100) NOT NULL,

`ProductName` VARCHAR(100) NOT NULL,

`ModelName` VARCHAR(100) NOT NULL,

`ProductDescription` VARCHAR(250) NOT NULL,

`ProductColor` VARCHAR(100) NOT NULL,

`ProductSize` VARCHAR(100) NOT NULL,

`ProductStyle` VARCHAR(100) NOT NULL,

`ProductCost` DECIMAL(10,4) NOT NULL,

`ProductPrice` DECIMAL(10,4) NOT NULL,

PRIMARY KEY (`ProductKey`),

KEY `ProductSubcategoryKey` (`ProductSubcategoryKey`),

CONSTRAINT `products_ibfk_1` FOREIGN KEY (`ProductSubcategoryKey`) REFERENCES `product_subcategories` (`ProductSubcategoryKey`)

);

/* Table structure for territories */

DROP TABLE IF EXISTS `territories`;

CREATE TABLE `territories` (

`TerritoryKey` INT NOT NULL,

`Region` VARCHAR(100) NOT NULL,

`Country` VARCHAR(100) NOT NULL,

`Continent` VARCHAR(100) NOT NULL,

PRIMARY KEY (`TerritoryKey`)

);

/* Table structure for returns */

DROP TABLE IF EXISTS `returns`;

CREATE TABLE `returns` (

`ReturnDate` DATE NOT NULL,

`TerritoryKey` INT NOT NULL,

`ProductKey` INT NOT NULL,

`ReturnQuantity` INT NOT NULL,

KEY `ProductKey` (`ProductKey`),

KEY `TerritoryKey` (`TerritoryKey`),

CONSTRAINT `returns_ibfk_1` FOREIGN KEY (`ProductKey`) REFERENCES `products` (`ProductKey`),

CONSTRAINT `returns_ibfk_2` FOREIGN KEY (`TerritoryKey`) REFERENCES `territories` (`TerritoryKey`)

);

/* Table structure for sales in 2015 */

DROP TABLE IF EXISTS `sales_2015`;

CREATE TABLE `sales_2015` (

`OrderDate` DATE NOT NULL,

`StockDate` DATE NOT NULL,

`OrderNumber` VARCHAR(100) NOT NULL,

`ProductKey` INT NOT NULL,

`CustomerKey` INT NOT NULL,

`TerritoryKey` INT NOT NULL,

`OrderLineItem` INT NOT NULL,

`OrderQuantity` INT NOT NULL,

PRIMARY KEY (`OrderNumber`),

KEY `ProductKey` (`ProductKey`),

KEY `CustomerKey` (`CustomerKey`),

KEY `TerritoryKey` (`TerritoryKey`),

KEY `OrderDate` (`OrderDate`),

CONSTRAINT `sales_2015_ibfk_1` FOREIGN KEY (`ProductKey`) REFERENCES `products` (`ProductKey`),

CONSTRAINT `sales_2015_ibfk_2` FOREIGN KEY (`CustomerKey`) REFERENCES `customers` (`CustomerKey`),

CONSTRAINT `sales_2015_ibfk_3` FOREIGN KEY (`TerritoryKey`) REFERENCES `territories` (`TerritoryKey`),

CONSTRAINT `sales_2015_ibfk_4` FOREIGN KEY (`OrderDate`) REFERENCES `calendar` (`OrderDate`)

);

/* Table structure for sales in 2016 */

DROP TABLE IF EXISTS `sales_2016`;

CREATE TABLE `sales_2016` (

`OrderDate` DATE NOT NULL,

`StockDate` DATE NOT NULL,

`OrderNumber` VARCHAR(100) NOT NULL,

`ProductKey` INT NOT NULL,

`CustomerKey` INT NOT NULL,

`TerritoryKey` INT NOT NULL,

`OrderLineItem` INT NOT NULL,

`OrderQuantity` INT NOT NULL,

PRIMARY KEY (`OrderNumber`),

KEY `ProductKey` (`ProductKey`),

KEY `CustomerKey` (`CustomerKey`),

KEY `TerritoryKey` (`TerritoryKey`),

KEY `OrderDate` (`OrderDate`),

CONSTRAINT `sales_2016_ibfk_1` FOREIGN KEY (`ProductKey`) REFERENCES `products` (`ProductKey`),

CONSTRAINT `sales_2016_ibfk_2` FOREIGN KEY (`CustomerKey`) REFERENCES `customers` (`CustomerKey`),

CONSTRAINT `sales_2016_ibfk_3` FOREIGN KEY (`TerritoryKey`) REFERENCES `territories` (`TerritoryKey`),

CONSTRAINT `sales_2016_ibfk_4` FOREIGN KEY (`OrderDate`) REFERENCES `calendar` (`OrderDate`)

);

/* Table structure for sales in 2017 */

DROP TABLE IF EXISTS `sales_2017`;

CREATE TABLE `sales_2017` (

`OrderDate` DATE NOT NULL,

`StockDate` DATE NOT NULL,

`OrderNumber` VARCHAR(100) NOT NULL,

`ProductKey` INT NOT NULL,

`CustomerKey` INT NOT NULL,

`TerritoryKey` INT NOT NULL,

`OrderLineItem` INT NOT NULL,

`OrderQuantity` INT NOT NULL,

PRIMARY KEY (`OrderNumber`),

KEY `ProductKey` (`ProductKey`),

KEY `CustomerKey` (`CustomerKey`),

KEY `TerritoryKey` (`TerritoryKey`),

KEY `OrderDate` (`OrderDate`),

CONSTRAINT `sales_2017_ibfk_1` FOREIGN KEY (`ProductKey`) REFERENCES `products` (`ProductKey`),

CONSTRAINT `sales_2017_ibfk_2` FOREIGN KEY (`CustomerKey`) REFERENCES `customers` (`CustomerKey`),

CONSTRAINT `sales_2017_ibfk_3` FOREIGN KEY (`TerritoryKey`) REFERENCES `territories` (`TerritoryKey`),

CONSTRAINT `sales_2017_ibfk_4` FOREIGN KEY (`OrderDate`) REFERENCES `calendar` (`OrderDate`)

);

Database Setup and Import with Python Link to heading

There are various methods for importing data into MySQL Workbench, such as the MySQL Workbench Data Import Wizard, which supports CSV and JSON files. However, this approach can be extremely slow and may fail due to the large volume of data or errors. As an alternative, the Load Data Infile statement can be used for handling huge datasets more efficiently. Converting CSV files to JSON format might also streamline this process.

However, an even more flexible and automated approach is to use Python with the MySQLdb library. This method allows for better control over the import process, ensuring faster and more reliable bulk data transfers. In this section, I will guide you through a Python class designed to automate the database setup and data import, significantly reducing the manual effort required for handling large datasets.

To follow along, you’ll need to have MySQL installed on your system. For Linux users, you can check out this short blog for installation instructions.

Below is a Python script that sets up the Adventure Works database schema and imports data from CSV files into MySQL tables.

from tqdm import tqdm

import csv

import MySQLdb

class MySQLDatabaseManager:

def __init__(self, db_config):

self.db_config = db_config

def Setup(self, script_path):

"""

Executes an SQL script to set up the database and tables

"""

conn = MySQLdb.connect(

host=self.db_config["host"],

user=self.db_config["user"],

password=self.db_config["password"],

port=self.db_config.get("port", 3306)

)

cursor = conn.cursor()

cursor.execute(f"DROP DATABASE IF EXISTS {self.db_config['database']}")

cursor.execute(f"CREATE DATABASE IF NOT EXISTS {self.db_config['database']}")

cursor.execute(f"USE {self.db_config['database']}")

with open(script_path, 'r') as file:

sql_script = file.read()

for statement in sql_script.split(';'):

if statement.strip():

cursor.execute(statement)

conn.commit()

print(f"{db_config['database']} Database schema was setup successfully!")

cursor.close()

conn.close()

def Importer(self, csv_file_path, table_name):

conn = MySQLdb.connect(

host=self.db_config.get("host"),

user=self.db_config.get("user"),

password=self.db_config.get("password"),

database=self.db_config.get("database"),

port=self.db_config.get("port", 3306)

)

cursor = conn.cursor()

with open(csv_file_path, 'r', encoding='ISO-8859-1') as csv_file:

csv_data = csv.DictReader(csv_file)

columns = csv_data.fieldnames

column_placeholders = ', '.join(['%s'] * len(columns))

sql = f"""

INSERT INTO {table_name} ({', '.join(columns)})

VALUES ({column_placeholders})

"""

print(f'Importing the CSV file: {csv_file_path}')

for row in tqdm(csv_data):

values = [row.get(column) for column in columns]

cursor.execute(sql, values)

conn.commit()

cursor.close()

conn.close()

# Example usage

if __name__ == "__main__":

db_config = {

"host": "127.0.0.1",

"user": "your_username",

"password": "your_password",

"database": "adventureworks",

"port": 3306

}

db_manager = MySQLDatabaseManager(db_config)

db_manager.Setup('rdbms/schema.sql')

db_manager.Importer('data/AdventureWorks_Customers.csv', 'customers')

db_manager.Importer('data/AdventureWorks_Calendar.csv', 'calendar')

db_manager.Importer('data/AdventureWorks_Product_Categories.csv', 'product_categories')

db_manager.Importer('data/AdventureWorks_Product_Subcategories.csv', 'product_subcategories')

db_manager.Importer('data/AdventureWorks_Products.csv', 'products')

db_manager.Importer('data/AdventureWorks_Territories.csv', 'territories')

db_manager.Importer('data/AdventureWorks_Returns.csv', 'returns')

db_manager.Importer('data/AdventureWorks_Sales_2015.csv', 'sales_2015')

db_manager.Importer('data/AdventureWorks_Sales_2016.csv', 'sales_2016')

db_manager.Importer('data/AdventureWorks_Sales_2017.csv', 'sales_2017')

This Python script automates two key processes:

- setup(): Executes an SQL script to create the Adventure Works database schema, ensuring all tables are properly defined before importing data.

- importer(): Populates the tables with data from CSV files. It dynamically reads the column names from each CSV file and maps them to the corresponding table in MySQL.

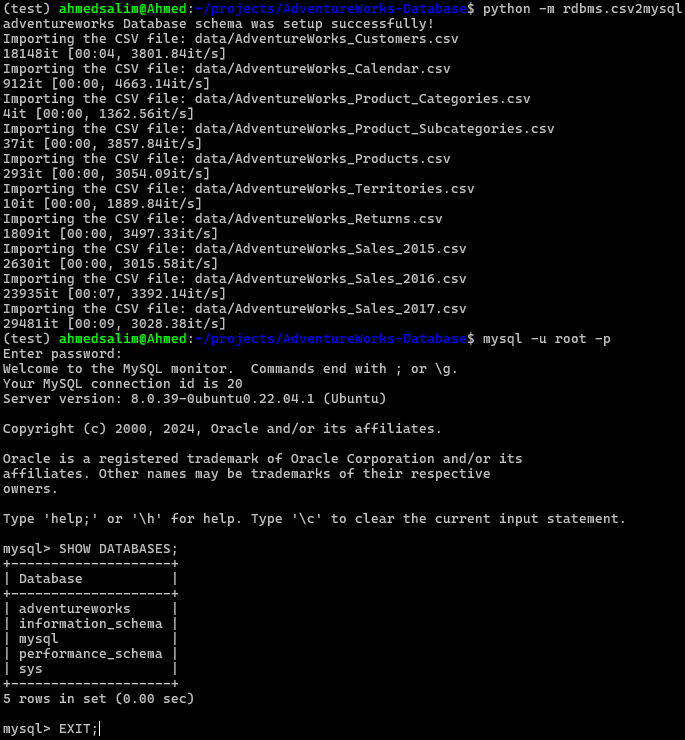

The tqdm library enhances the user experience by displaying a progress bar during the import process, especially useful for large datasets, the output of the script is shown below:

Output from MySQLDatabaseManager class

cd rdbms/

mysqldump --skip-extended-insert --compact -u root -p adventureworks > DUMP_adventureworks.sql

MySQL Workbench Link to heading

After creating the database, we can access it using tools like MySQL Workbench, phpMyAdmin, or others. For this project, we utilized MySQL Workbench to analyze our SQL queries effectively.

Entity Relationship Diagram (ERD) Link to heading

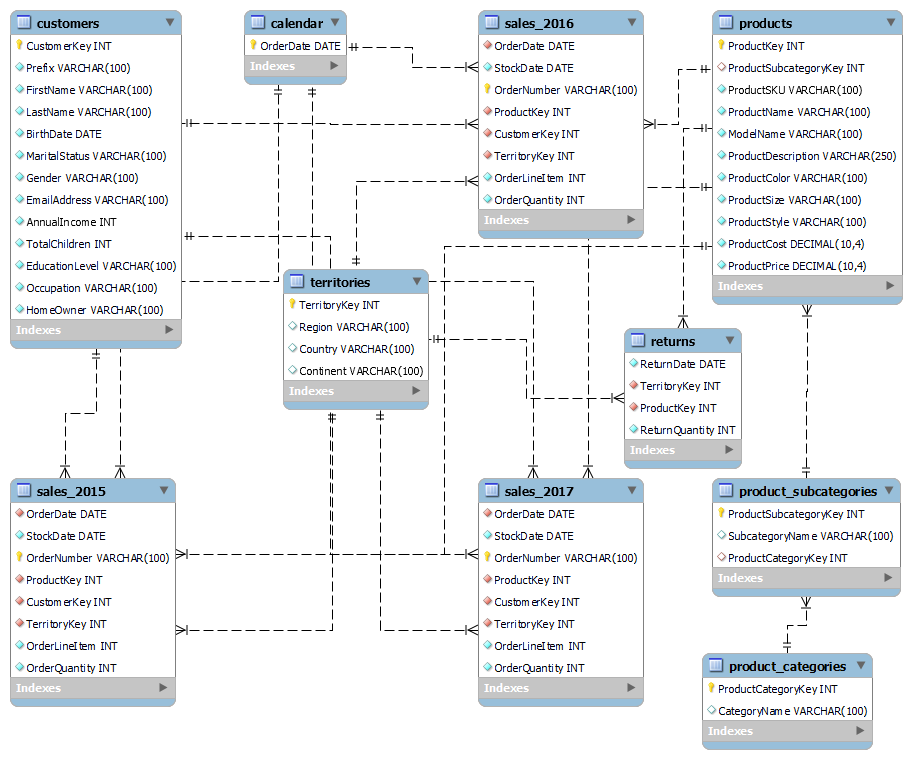

The schema of the Adventure Works Database incorporates various attributes that define the relationships among different entities. The ERD below visualizes these relationships, offering a comprehensive view of the database structure. It illustrates the relational schema, which consists of ten tables: customers , products , product_subcategories , product_categories , calendar , territories , sales_2015 , sales_2016 , sales_2017 , and returns .

In the ERD:

- Primary Keys (PK) are represented by a golden key symbol.

- Foreign Keys (FK) are depicted as a red rhombus.

Entity Relationship Diagram

Statistical & Analysis queries Link to heading

To gain deeper insights into the dataset, we performed several statistical and analysis queries. These queries help us understand various aspects of the data, such as counts, averages, medians, and means. Below are examples of the queries used for our analysis:

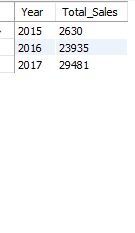

-- 1/ COUNT all sales in each year

SELECT '2015' AS Year, COUNT(*) AS Total_Sales FROM sales_2015

UNION ALL

SELECT '2016' AS Year, COUNT(*) AS Total_Sales FROM sales_2016

UNION ALL

SELECT '2017' AS Year, COUNT(*) AS Total_Sales FROM sales_2017;

staistical queries 1

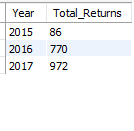

-- 2/ MAX return quantity in each year

SELECT '2015' AS Year, SUM(ReturnQuantity) AS Total_Returns FROM returns

WHERE ReturnDate BETWEEN '2015-01-01' AND '2015-12-31'

UNION ALL

SELECT '2016' AS Year, SUM(ReturnQuantity) AS Total_Returns FROM returns

WHERE ReturnDate BETWEEN '2016-01-01' AND '2016-12-31'

UNION ALL

SELECT '2017' AS Year, SUM(ReturnQuantity) AS Total_Returns FROM returns

WHERE ReturnDate BETWEEN '2017-01-01' AND '2017-12-31';

staistical queries 2

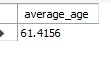

-- 3/ Calculate the average age of all customers

SELECT AVG(EXTRACT( YEAR FROM DATE('2023-01-17')) - (EXTRACT(YEAR FROM BirthDate))) AS average_age

FROM customers;

staistical queries 3

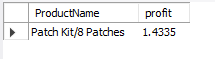

-- 4/ Find minimum product profit

SELECT products.ProductName, ProductPrice - ProductCost as profit

FROM products

WHERE (ProductPrice - ProductCost) = (SELECT MIN(ProductPrice - ProductCost) FROM products);

staistical queries 4

Data Analysis SQL Queries Link to heading

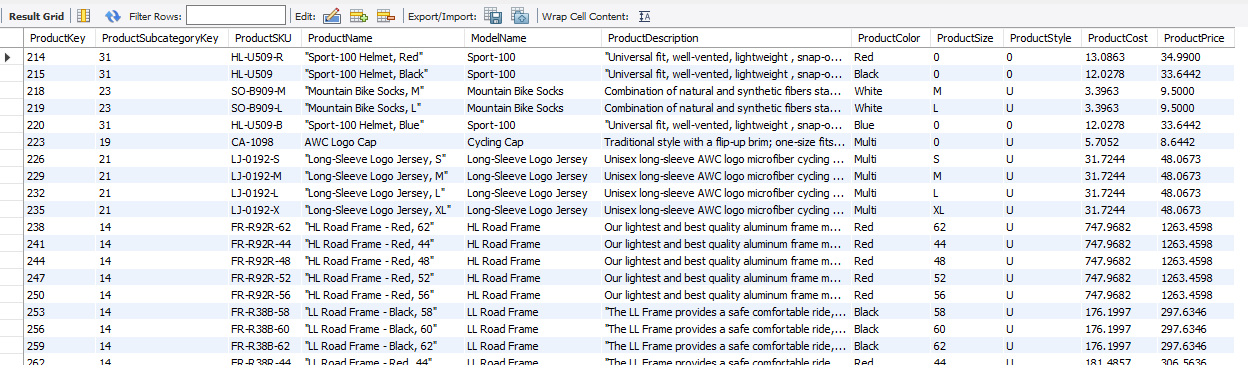

Question 1: Find all the products and identify them by their unique key values in ascending order.

SELECT * FROM products ORDER BY ProductKey ASC;

Output from Question 1

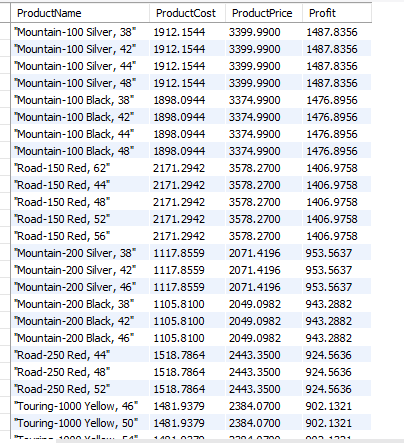

Question 2: Find all the products profit and identify them by their names in ascending order.

SELECT ProductName, ProductCost, ProductPrice,

ProductPrice-ProductCost AS Profit

FROM products ORDER BY profit DESC;

Output from Question 2

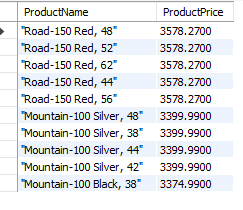

Question 3: Find the 10 most expensive products in descending order.

SELECT ProductName, ProductPrice FROM products

ORDER BY ProductPrice DESC LIMIT 10;

Output from Question 3

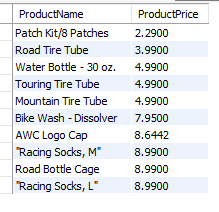

Question 4: Find the 10 cheapest products in ascending order:

SELECT ProductName, ProductPrice FROM products

ORDER BY ProductPrice ASC LIMIT 10;

Output from Question 4

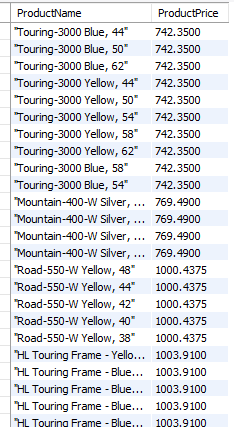

Question 5: Find the average price from products and products greater than the average:

SELECT ProductName, ProductPrice FROM products

HAVING ProductPrice > (SELECT

AVG(ProductPrice) FROM products)

ORDER BY ProductPrice ASC;

Output from Question 5

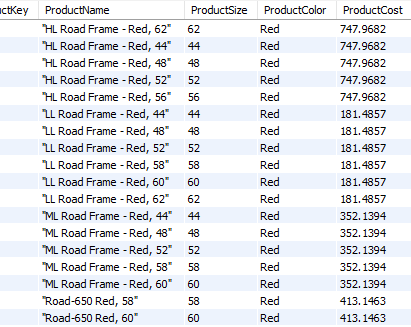

Question 6: List all products whose size is medium, red in color and the product cost less than 800:

SELECT ProductKey, ProductName,

ProductSize,

ProductColor, ProductCost

FROM products

WHERE ProductSize > 20

AND ProductColor='red'

AND ProductCost < 800;

Output from Question 6

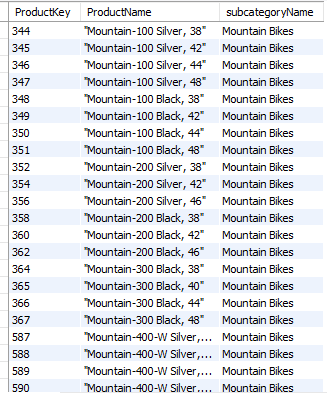

Question 7: List all products based on subcategories:

SELECT ProductKey, ProductName, subcategoryName

FROM products

JOIN product_subcategories

ON products.ProductSubcategoryKey

=product_subcategories.ProductSubcategoryKey;

Output from Question 7

Question 8: List all customers who owns house by gender by DESC order of Annual Income:

SELECT gender, FirstName, LastName,

AnnualIncome, HomeOwner

FROM customers

WHERE HomeOwner = 'Y'

ORDER BY AnnualIncome DESC;

Output from Question 8

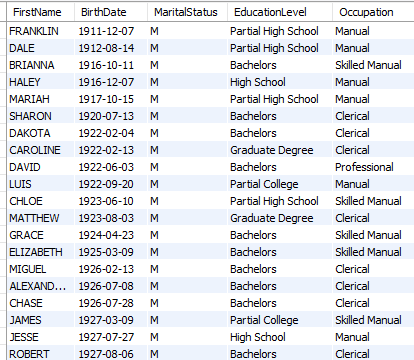

Question 9: Find married customers that own a house and their occupation by ascending order of birth date:

SELECT FirstName,BirthDate, MaritalStatus,

EducationLevel, Occupation

FROM customers

WHERE MaritalStatus= 'm'

AND HomeOwner ='Y'

ORDER BY BirthDate ASC;

Output from Question 9

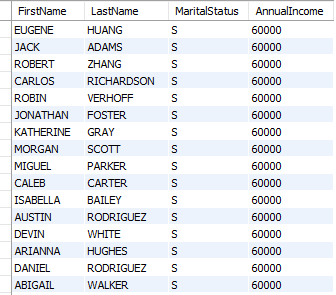

Question 10: Find customers that are single and whose annual income is greater than 50, 000 in ascending order:

SELECT FirstName, LastName,

MaritalStatus, AnnualIncome

FROM customers

WHERE MaritalStatus='s' AND

AnnualIncome > 50000

ORDER BY AnnualIncome ASC;

Output from Question 10

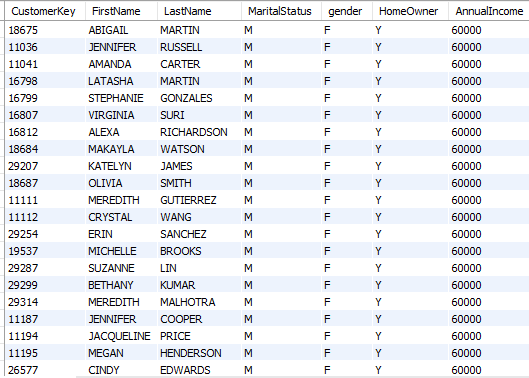

Question 11: Among the female customers who are married, find the ones that have houses and their annual income is greater than average income:

SELECT CustomerKey, FirstName,

LastName, MaritalStatus,gender, HomeOwner, AnnualIncome

FROM customers

WHERE MaritalStatus = 'M'

AND gender = 'F'

AND HomeOwner='Y'

AND AnnualIncome > (select avg(AnnualIncome)from customers)

ORDER BY AnnualIncome;

Output from Question 11

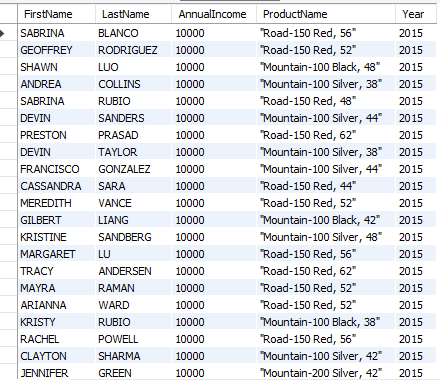

Question 12: List all the customers that their annual income is less than 20, 000 and bought products in 2015:

SELECT FirstName, LastName,

AnnualIncome, ProductName,

YEAR(OrderDate) AS Year

FROM sales_2015

JOIN products ON sales_2015.ProductKey = products.ProductKey

JOIN customers ON sales_2015.CustomerKey = customers.CustomerKey

HAVING AnnualIncome < 20000;

Output from Question 12

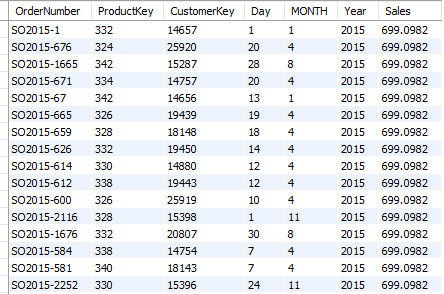

Question 13: List all sales from 2015 in ascending order by order Number, product key and customer Key and in day/month/year format:

SELECT OrderNumber,products.ProductKey,

customers.CustomerKey,DAY(OrderDate) AS Day,

MONTH(OrderDate) AS MONTH, YEAR(OrderDate) AS Year,

sales_2015.OrderQuantity * products.ProductPrice AS Sales

from sales_2015 join products

ON sales_2015.ProductKey = products.ProductKey

JOIN customers ON

sales_2015.CustomerKey = customers.CustomerKey

ORDER BY Sales ASC;

Output from Question 13

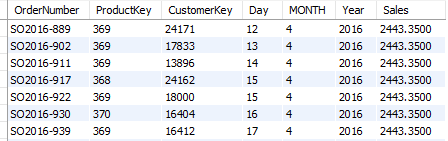

Question 14: List all sales from 2016 order by orderNumber and in day/month/year format:

SELECT OrderNumber,products.ProductKey,

customers.CustomerKey,DAY(OrderDate) AS Day,

MONTH(OrderDate) AS MONTH, YEAR(OrderDate) AS Year,

sales_2016.OrderQuantity * products.ProductPrice AS Sales

from sales_2016 join products

ON sales_2016.ProductKey = products.ProductKey

JOIN customers ON

sales_2016.CustomerKey = customers.CustomerKey

ORDER BY Sales DESC;

Output from Question 14

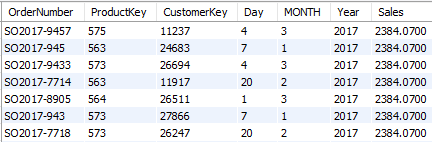

Question 15: List all sales from 2017 order by orderNumber and in day/month/year format:

SELECT OrderNumber,products.ProductKey,

customers.CustomerKey,DAY(OrderDate) AS Day,

MONTH(OrderDate) AS MONTH, YEAR(OrderDate) AS Year,

sales_2017.OrderQuantity * products.ProductPrice AS Sales

from sales_2017 join products

ON sales_2017.ProductKey = products.ProductKey

JOIN customers ON

sales_2017.CustomerKey = customers.CustomerKey

ORDER BY Sales DESC;

Output from Question 15

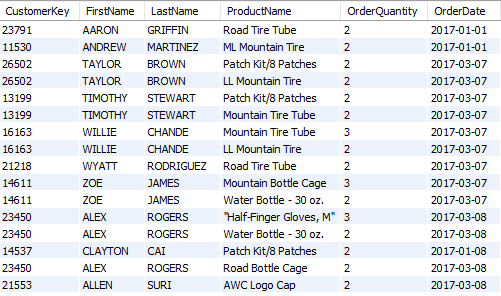

Question 16: List all the customers that purchased the most sold products in the year that has higher sales 2017:

SELECT customers.CustomerKey,

FirstName, LastName,

ProductName, OrderQuantity, OrderDate

FROM sales_2017

JOIN customers ON

sales_2017.CustomerKey = customers.CustomerKey

JOIN products ON

sales_2017.ProductKey = products.ProductKey

WHERE OrderQuantity > (SELECT AVG(OrderQuantity)

FROM sales_2017);

Output from Question 16

Question 17: Count the products that purchased the same item in 2016:

SELECT count(*) as quantity_sold, ProductName

FROM sales_2016

JOIN customers ON sales_2016.CustomerKey = customers.CustomerKey

JOIN products ON sales_2016.ProductKey = products.ProductKey

GROUP BY ProductName

ORDER BY quantity_sold DESC;

Output from Question 17

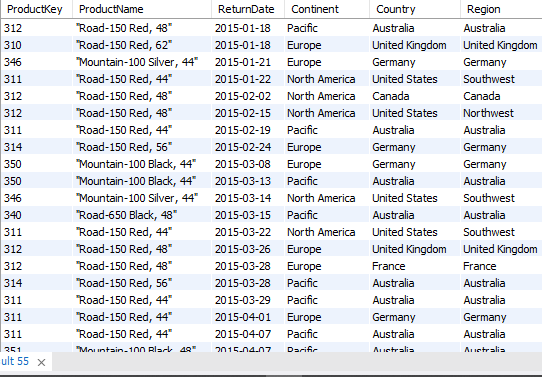

Question 18: List all products that have been returned based on continent, country and region and order by the return date:

SELECT products.ProductKey,

ProductName,ReturnDate,

Continent, Country, Region

FROM returns

JOIN products ON

returns.ProductKey = products.ProductKey

JOIN territories ON

returns.TerritoryKey = territories.TerritoryKey

ORDER BY ReturnDate;

Output from Question 18

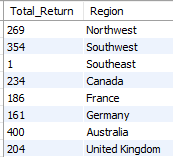

Question 19: Count the returned products group by region:

SELECT count(*) AS Total_Return, Region

FROM returns

JOIN territories ON

returns.TerritoryKey = territories.TerritoryKey

GROUP BY region;

Output from Question 19

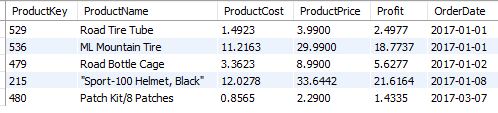

Question 20: Find out the profit of the top 5 products for 2017:

SELECT products.ProductKey, ProductName,ProductCost,

ProductPrice, ProductPrice - ProductCost AS Profit, OrderDate

FROM sales_2017

JOIN products ON sales_2017.ProductKey = products.ProductKey

LIMIT 5;

Output from Question 20

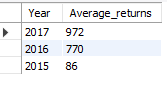

Question 21: Find the average returns in each year:

SELECT '2017' AS Year, AVG(ReturnQuantity) AS Average_returns FROM returns

WHERE ReturnDate BETWEEN '2017-01-01' AND '2017-12-31'

UNION ALL

SELECT '2016' AS Year, AVG(ReturnQuantity) AS Average_returns FROM returns

WHERE ReturnDate BETWEEN '2016-01-01' AND '2016-12-31'

UNION ALL

SELECT '2015' AS Year, AVG(ReturnQuantity) AS Average_returns FROM returns

WHERE ReturnDate BETWEEN '2015-01-01' AND '2015-12-31';

Output from Question 21

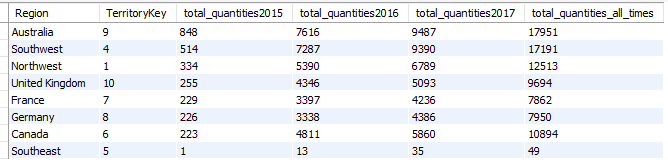

Question 22: Find the total quantities orded in each year and at all times within each region:

WITH cte2015 AS (

SELECT Region, territories.TerritoryKey, territories.Country, SUM(OrderQuantity) as total_quantity

FROM territories

JOIN sales_2015

ON territories.TerritoryKey = sales_2015.TerritoryKey

GROUP BY Region, territories.Country, territories.TerritoryKey

), cte2016 AS (

SELECT Region, territories.TerritoryKey, territories.Country, SUM(OrderQuantity) as total_quantity

FROM territories

JOIN sales_2016

ON territories.TerritoryKey = sales_2016.TerritoryKey

GROUP BY Region, territories.Country, territories.TerritoryKey

), cte2017 AS (

SELECT Region, territories.TerritoryKey, territories.Country, SUM(OrderQuantity) as total_quantity

FROM territories

JOIN sales_2017

ON territories.TerritoryKey = sales_2017.TerritoryKey

GROUP BY Region, territories.Country, territories.TerritoryKey

), cte_all_times AS (

SELECT Region, territories.TerritoryKey, territories.Country, SUM(OrderQuantity) as total_quantity

FROM territories

JOIN (SELECT * FROM sales_2015

UNION ALL

SELECT * FROM sales_2016

UNION ALL

SELECT * FROM sales_2017) s

ON territories.TerritoryKey = s.TerritoryKey

GROUP BY Region, territories.Country, territories.TerritoryKey

)

SELECT cte2015.Region, cte2015.TerritoryKey,

MAX(cte2015.total_quantity) as total_quantities2015,

MAX(cte2016.total_quantity) as total_quantities2016,

MAX(cte2017.total_quantity) as total_quantities2017,

MAX(cte_all_times.total_quantity) as total_quantities_all_times

FROM cte2015

JOIN cte2016

ON cte2015.Region = cte2016.Region and cte2015.TerritoryKey = cte2016.TerritoryKey

JOIN cte2017

ON cte2016.Region = cte2017.Region and cte2016.TerritoryKey = cte2017.TerritoryKey

JOIN cte_all_times

ON cte2017.Region = cte_all_times.Region and cte2017.TerritoryKey = cte_all_times.TerritoryKey

GROUP BY cte2015.Region, cte2015.TerritoryKey

ORDER BY total_quantities2015 DESC;

Output from Question 22